10 BREAKTHROUGH TECHNOLOGIES 2018

Dueling neural networks. Artificial embryos. AI in the cloud. Welcome to our annual list of the 10 technology advances we think will shape the way we work and live now and for years to come.

Every year since 2001 we’ve picked what we call the 10 Breakthrough Technologies. People often ask, what exactly do you mean by “breakthrough”? It’s a reasonable question—some of our picks haven’t yet reached widespread use, while others may be on the cusp of becoming commercially available. What we’re really looking for is a technology, or perhaps even a collection of technologies, that will have a profound effect on our lives.

For this year, a new technique in artificial intelligence called GANs is giving machines imagination; artificial embryos, despite some thorny ethical constraints, are redefining how life can be created and are opening a research window into the early moments of a human life; and a pilot plant in the heart of Texas’s petrochemical industry is attempting to create completely clean power from natural gas—probably a major energy source for the foreseeable future. These and the rest of our list will be worth keeping an eye on. —The Editors

This story is part of our March/April 2018 Issue


3-D Metal Printing

DEREK BRAHNEY

While 3-D printing has been around for decades, it has remained largely in the domain of hobbyists and designers producing one-off prototypes. And printing objects with anything other than plastics—in particular, metal—has been expensive and painfully slow.

Now, however, it’s becoming cheap and easy enough to be a potentially practical way of manufacturing parts. If widely adopted, it could change the way we mass-produce many products.

In the short term, manufacturers wouldn’t need to maintain large inventories—they could simply print an object, such as a replacement part for an aging car, whenever someone needs it.

In the longer term, large factories that mass-produce a limited range of parts might be replaced by smaller ones that make a wider variety, adapting to customers’ changing needs.

The technology can create lighter, stronger parts, and complex shapes that aren’t possible with conventional metal fabrication methods. It can also provide more precise control of the microstructure of metals. In 2017, researchers from the Lawrence Livermore National Laboratory announced they had developed a 3-D-printing method for creating stainless-steel parts twice as strong as traditionally made ones.

Also in 2017, 3-D-printing company Markforged, a small startup based outside Boston, released the first 3-D metal printer for under $100,000.

Another Boston-area startup, Desktop Metal, began to ship its first metal prototyping machines in December 2017. It plans to begin selling larger machines, designed for manufacturing, that are 100 times faster than older metal printing methods.

The printing of metal parts is also getting easier. Desktop Metal now offers software that generates designs ready for 3-D printing. Users tell the program the specs of the object they want to print, and the software produces a computer model suitable for printing.

GE, which has long been a proponent of using 3-D printing in its aviation products (see “10 Breakthrough Technologies of 2013: Additive Manufacturing”), has a test version of its new metal printer that is fast enough to make large parts. The company plans to begin selling the printer in 2018. —Erin Winick

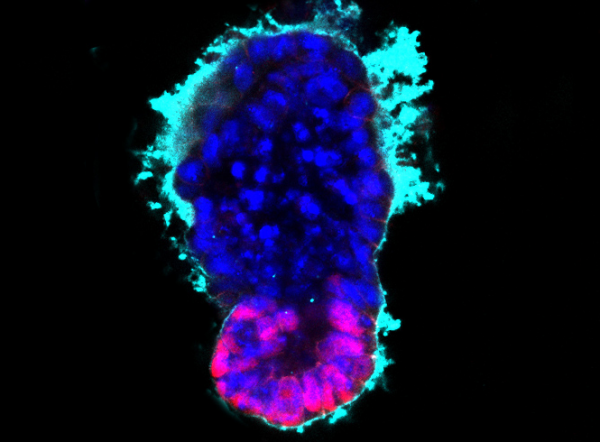

Artificial Embryos

UNIVERSITY OF CAMBRIDGE

In a breakthrough that redefines how life can be created, embryologists working at the University of Cambridge in the UK have grown realistic-looking mouse embryos using only stem cells. No egg. No sperm. Just cells plucked from another embryo.

The researchers placed the cells carefully in a three-dimensional scaffold and watched, fascinated, as they started communicating and lining up into the distinctive bullet shape of a mouse embryo several days old.

“We know that stem cells are magical in their powerful potential of what they can do. We did not realize they could self-organize so beautifully or perfectly,” Magdalena Zernicka-Goetz, who headed the team, told an interviewer at the time.

Zernicka-Goetz says her “synthetic” embryos probably couldn’t have grown into mice. Nonetheless, they’re a hint that soon we could have mammals born without an egg at all.

That isn’t Zernicka-Goetz’s goal. She wants to study how the cells of an early embryo begin taking on their specialized roles. The next step, she says, is to make an artificial embryo out of human stem cells, work that’s being pursued at the University of Michigan and Rockefeller University.

Synthetic human embryos would be a boon to scientists, letting them tease apart events early in development. And since such embryos start with easily manipulated stem cells, labs will be able to employ a full range of tools, such as gene editing, to investigate them as they grow.

Artificial embryos, however, pose ethical questions. What if they turn out to be indistinguishable from real embryos? How long can they be grown in the lab before they feel pain? We need to address those questions before the science races ahead much further, bioethicists say. —Antonio Regalado

Sensing City

SIDEWALK TORONTO

Numerous smart-city schemes have run into delays, dialed down their ambitious goals, or priced out everyone except the super-wealthy. A new project in Toronto, called Quayside, is hoping to change that pattern of failures by rethinking an urban neighborhood from the ground up and rebuilding it around the latest digital technologies.

Alphabet’s Sidewalk Labs, based in New York City, is collaborating with the Canadian government on the high-tech project, slated for Toronto’s industrial waterfront.

One of the project’s goals is to base decisions about design, policy, and technology on information from an extensive network of sensors that gather data on everything from air quality to noise levels to people’s activities.

The plan calls for all vehicles to be autonomous and shared. Robots will roam underground doing menial chores like delivering the mail. Sidewalk Labs says it will open access to the software and systems it’s creating so other companies can build services on top of them, much as people build apps for mobile phones.

The company intends to closely monitor public infrastructure, and this has raised concerns about data governance and privacy. But Sidewalk Labs believes it can work with the community and the local government to alleviate those worries.

“What’s distinctive about what we’re trying to do in Quayside is that the project is not only extraordinarily ambitious but also has a certain amount of humility,” says Rit Aggarwala, the executive in charge of Sidewalk Labs’ urban-systems planning. That humility may help Quayside avoid the pitfalls that have plagued previous smart-city initiatives.

Other North American cities are already clamoring to be next on Sidewalk Labs’ list, according to Waterfront Toronto, the public agency overseeing Quayside’s development. “San Francisco, Denver, Los Angeles, and Boston have all called asking for introductions,” says the agency’s CEO, Will Fleissig. —Elizabeth Woyke

AI for Everybody

MIGUEL PORLAN

Artificial intelligence has so far been mainly the plaything of big tech companies like Amazon, Baidu, Google, and Microsoft, as well as some startups. For many other companies and parts of the economy, AI systems are too expensive and too difficult to implement fully.

What’s the solution? Machine-learning tools based in the cloud are bringing AI to a far broader audience. So far, Amazon dominates cloud AI with its AWS subsidiary. Google is challenging that with TensorFlow, an open-source AI library that can be used to build other machine-learning software. Recently Google announced Cloud AutoML, a suite of pre-trained systems that could make AI simpler to use.

Microsoft, which has its own AI-powered cloud platform, Azure, is teaming up with Amazon to offer Gluon, an open-source deep-learning library. Gluon is supposed to make building neural nets—a key technology in AI that crudely mimics how the human brain learns—as easy as building a smartphone app.

It is uncertain which of these companies will become the leader in offering AI cloud services. But it is a huge business opportunity for the winners.

These products will be essential if the AI revolution is going to spread more broadly through different parts of the economy.

Currently AI is used mostly in the tech industry, where it has created efficiencies and produced new products and services. But many other businesses and industries have struggled to take advantage of the advances in artificial intelligence. Sectors such as medicine, manufacturing, and energy could also be transformed if they were able to implement the technology more fully, with a huge boost to economic productivity.

Most companies, though, still don’t have enough people who know how to use cloud AI. So Amazon and Google are also setting up consultancy services. Once the cloud puts the technology within the reach of almost everyone, the real AI revolution can begin.

—Jackie Snow

Note: Coursera offers a paid course, “AI for Everyone” by Andrew Ng, that can get you started on AI (artificial intelligence).

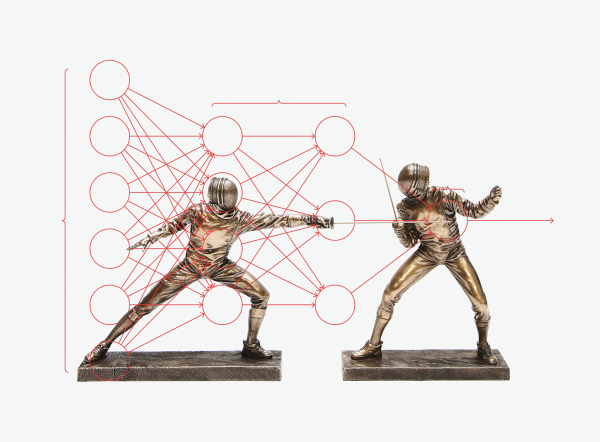

Dueling Neural Networks

ILLUSTRATION BY DEREK BRAHNEY | DIAGRAM COURTESY OF MICHAEL NIELSEN, “NEURAL NETWORKS AND DEEP LEARNING”, DETERMINATION PRESS, 2015

Artificial intelligence is getting very good at identifying things: show it a million pictures, and it can tell you with uncanny accuracy which ones depict a pedestrian crossing a street. But AI is hopeless at generating images of pedestrians by itself. If it could do that, it would be able to create gobs of realistic but synthetic pictures depicting pedestrians in various settings, which a self-driving car could use to train itself without ever going out on the road.

The problem is, creating something entirely new requires imagination—and until now that has perplexed AIs.

The solution first occurred to Ian Goodfellow, then a PhD student at the University of Montreal, during an academic argument in a bar in 2014. The approach, known as a generative adversarial network, or GAN, takes two neural networks—the simplified mathematical models of the human brain that underpin most modern machine learning—and pits them against each other in a digital cat-and-mouse game.

Both networks are trained on the same data set. One, known as the generator, is tasked with creating variations on images it’s already seen—perhaps a picture of a pedestrian with an extra arm. The second, known as the discriminator, is asked to identify whether the example it sees is like the images it has been trained on or a fake produced by the generator—basically, is that three-armed person likely to be real?

Over time, the generator can become so good at producing images that the discriminator can’t spot fakes. Essentially, the generator has been taught to recognize, and then create, realistic-looking images of pedestrians.
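The back-and-forth between the two networks can be sketched in a few lines of Python. This is a toy illustration under heavy assumptions, not the setup used by any of the researchers mentioned here: the "images" are single numbers drawn from a bell curve centered on 4, the generator is a straight line with two parameters (`a`, `b`), the discriminator is a one-input logistic model (`w`, `c`), and the function name `train_toy_gan` and all settings are hypothetical. In this sketch the generator never sees the real data directly; it learns only from the discriminator's verdicts.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=300, batch=64, lr=0.05, seed=0):
    """Train a one-dimensional toy GAN and return (a, b, w, c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: P(real | x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Assumed stand-in for the real data set: numbers clustered around 4.
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: gradient ascent on log D(fake), i.e. try to fool
        # the freshly updated discriminator.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga += (1.0 - d) * w * z
            gb += (1.0 - d) * w
        a += lr * ga / batch
        b += lr * gb / batch
    return a, b, w, c
```

Calling `train_toy_gan(steps=20)` is enough to see the generator's offset `b` start drifting from 0 toward the real data. A discriminator this simple can only judge roughly where the numbers sit, so longer runs of the toy may wobble rather than settle, a small-scale hint of why real GANs are considered tricky to train.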

The technology has become one of the most promising advances in AI in the past decade, able to help machines produce results that fool even humans.

GANs have been put to use creating realistic-sounding speech and photorealistic fake imagery. In one compelling example, researchers from chipmaker Nvidia primed a GAN with celebrity photographs to create hundreds of credible faces of people who don’t exist. Another research group made not-unconvincing fake paintings that look like the works of van Gogh. Pushed further, GANs can reimagine images in different ways—making a sunny road appear snowy, or turning horses into zebras.

The results aren’t always perfect: GANs can conjure up bicycles with two sets of handlebars, say, or faces with eyebrows in the wrong place. But because the images and sounds are often startlingly realistic, some experts believe there’s a sense in which GANs are beginning to understand the underlying structure of the world they see and hear. And that means AI may gain, along with a sense of imagination, a more independent ability to make sense of what it sees in the world. —Jamie Condliffe

Babel-Fish Earbuds

GOOGLE

In the cult sci-fi classic The Hitchhiker’s Guide to the Galaxy, you slide a yellow Babel fish into your ear to get translations in an instant. In the real world, Google has come up with an interim solution: a $159 pair of earbuds, called Pixel Buds. These work with its Pixel smartphones and Google Translate app to produce practically real-time translation.

One person wears the earbuds, while the other holds a phone. The earbud wearer speaks in his or her language—English is the default—and the app translates the talking and plays it aloud on the phone. The person holding the phone responds; this response is translated and played through the earbuds.

Google Translate already has a conversation feature, and its iOS and Android apps let two users speak as it automatically figures out what languages they’re using and then translates them. But background noise can make it hard for the app to understand what people are saying, and also to figure out when one person has stopped speaking and it’s time to start translating.

Pixel Buds get around these problems because the wearer taps and holds a finger on the right earbud while talking. Splitting the interaction between the phone and the earbuds gives each person control of a microphone and helps the speakers maintain eye contact, since they’re not trying to pass a phone back and forth.

The Pixel Buds were widely panned for subpar design. They do look silly, and they may not fit well in your ears. They can also be hard to set up with a phone.

Clunky hardware can be fixed, though. Pixel Buds show the promise of mutually intelligible communication between languages in close to real time. And no fish required. —Rachel Metz

Zero-Carbon Natural Gas

MIGUEL PORLAN

The world is probably stuck with natural gas as one of our primary sources of electricity for the foreseeable future. Cheap and readily available, it now accounts for more than 30 percent of US electricity and 22 percent of world electricity. And although it’s cleaner than coal, it’s still a massive source of carbon emissions.

A pilot power plant just outside Houston, in the heart of the US petroleum and refining industry, is testing a technology that could make clean energy from natural gas a reality. The company behind the 50-megawatt project, Net Power, believes it can generate power at least as cheaply as standard natural-gas plants and capture essentially all the carbon dioxide released in the process.

If so, it would mean the world has a way to produce carbon-free energy from a fossil fuel at a reasonable cost. Such natural-gas plants could be cranked up and down on demand, avoiding the high capital costs of nuclear power and sidestepping the unsteady supply that renewables generally provide.

Net Power is a collaboration between technology development firm 8 Rivers Capital, Exelon Generation, and energy construction firm CB&I. The company is in the process of commissioning the plant and has begun initial testing. It intends to release results from early evaluations in the months ahead.

The plant puts the carbon dioxide released from burning natural gas under high pressure and heat, using the resulting supercritical CO2 as the “working fluid” that drives a specially built turbine. Much of the carbon dioxide can be continuously recycled; the rest can be captured cheaply.

A key part of pushing down the costs depends on selling that carbon dioxide. Today the main use is in helping to extract oil from petroleum wells. That’s a limited market, and not a particularly green one. Eventually, however, Net Power hopes to see growing demand for carbon dioxide in cement manufacturing and in making plastics and other carbon-based materials.

Net Power’s technology won’t solve all the problems with natural gas, particularly on the extraction side. But as long as we’re using natural gas, we might as well use it as cleanly as possible. Of all the clean-energy technologies in development, Net Power’s is one of the furthest along to promise more than a marginal advance in cutting carbon emissions. —James Temple

Perfect Online Privacy

MIGUEL PORLAN

True internet privacy could finally become possible thanks to a new tool that can—for instance—let you prove you’re over 18 without revealing your date of birth, or prove you have enough money in the bank for a financial transaction without revealing your balance or other details. That limits the risk of a privacy breach or identity theft.

The tool is an emerging cryptographic protocol called a zero-knowledge proof. Though researchers have worked on it for decades, interest has exploded in the past year, thanks in part to the growing obsession with cryptocurrencies, most of which aren’t private.
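To give a feel for the idea, here is a minimal sketch of one of the oldest and simplest zero-knowledge-style protocols, Schnorr's interactive identification scheme. It is not a zk-SNARK and not what Zcash uses; it only shows how someone can convince a verifier that they know a secret number `x` without sending it. The parameters `P`, `Q`, and `G` are toy values chosen for readability and are far too small to be secure, and all function names are hypothetical.

```python
import secrets

# Toy public parameters (insecure, for illustration only): G generates a
# subgroup of prime order Q in the integers modulo P.
P, Q, G = 23, 11, 2


def keygen():
    """Return (secret x, public y = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def commit():
    """Prover picks a one-time random nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x, r, challenge):
    """Prover answers the challenge; the random r masks the secret x."""
    return (r + challenge * x) % Q


def verify(y, t, challenge, s):
    """Verifier checks G^s == t * y^challenge (mod P) using public values only."""
    return pow(G, s, P) == (t * pow(y, challenge, P)) % P


def run_proof(x, y):
    """Run one round: commit, random challenge from the verifier, response, check."""
    r, t = commit()
    challenge = secrets.randbelow(Q)
    s = respond(x, r, challenge)
    return verify(y, t, challenge, s)
```

An honest prover always passes (`run_proof(*keygen())` returns `True`), while the verifier only ever sees `t`, the challenge, and `s`, none of which expose `x` on its own. With numbers this small a cheater could guess the challenge about one time in eleven, which is one reason real systems use enormous parameters.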

Much of the credit for a practical zero-knowledge proof goes to Zcash, a digital currency that launched in late 2016. Zcash’s developers used a method called a zk-SNARK (for “zero-knowledge succinct non-interactive argument of knowledge”) to give users the power to transact anonymously.

That’s not normally possible in Bitcoin and most other public blockchain systems, in which transactions are visible to everyone. Though these transactions are theoretically anonymous, they can be combined with other data to track and even identify users. Vitalik Buterin, creator of Ethereum, the world’s second-most-popular blockchain network, has described zk-SNARKs as an “absolutely game-changing technology.”

For banks, this could be a way to use blockchains in payment systems without sacrificing their clients’ privacy. Last year, JPMorgan Chase added zk-SNARKs to its own blockchain-based payment system.

For all their promise, though, zk-SNARKs are computation-heavy and slow. They also require a so-called “trusted setup,” creating a cryptographic key that could compromise the whole system if it fell into the wrong hands. But researchers are looking at alternatives that deploy zero-knowledge proofs more efficiently and don’t require such a key. —Mike Orcutt

Genetic Fortune-Telling

DEREK BRAHNEY

One day, babies will get DNA report cards at birth. These reports will offer predictions about their chances of suffering a heart attack or cancer, of getting hooked on tobacco, and of being smarter than average.

The science making these report cards possible has suddenly arrived, thanks to huge genetic studies—some involving more than a million people.

It turns out that most common diseases and many behaviors and traits, including intelligence, are a result of not one or a few genes but many acting in concert. Using the data from large ongoing genetic studies, scientists are creating what they call “polygenic risk scores.”
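In its simplest form, such a score is just a weighted sum: for each genetic variant, multiply the number of risk copies a person carries (0, 1, or 2) by that variant's estimated effect, then add everything up. The sketch below assumes that simple additive form; the variant names and effect sizes are invented for illustration and do not come from any real study.

```python
# Hypothetical effect sizes per risk allele (illustrative values, not real data).
EFFECT_SIZES = {"variant_A": 0.12, "variant_B": -0.05, "variant_C": 0.30}


def polygenic_score(allele_counts, effect_sizes=EFFECT_SIZES):
    """Sum each variant's effect size times the number of risk alleles carried."""
    total = 0.0
    for variant, effect in effect_sizes.items():
        count = allele_counts.get(variant, 0)  # a missing variant counts as 0 copies
        if count not in (0, 1, 2):
            raise ValueError(f"allele count for {variant} must be 0, 1, or 2")
        total += effect * count
    return total


# A made-up person: two copies of A, one of B, none of C.
person = {"variant_A": 2, "variant_B": 1, "variant_C": 0}
score = polygenic_score(person)
```

Real scores combine thousands or millions of variants, and the resulting number only becomes meaningful when compared with the distribution of scores across a population.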

Though the new DNA tests offer probabilities, not diagnoses, they could greatly benefit medicine. For example, if women at high risk for breast cancer got more mammograms and those at low risk got fewer, those exams might catch more real cancers and set off fewer false alarms.

Pharmaceutical companies can also use the scores in clinical trials of preventive drugs for such illnesses as Alzheimer’s or heart disease. By picking volunteers who are more likely to get sick, they can more accurately test how well the drugs work.

The trouble is, the predictions are far from perfect. Who wants to know they might develop Alzheimer’s? What if someone with a low risk score for cancer puts off being screened, and then develops cancer anyway?

Polygenic scores are also controversial because they can predict any trait, not only diseases. For instance, they can now account for about 10 percent of the variation in people’s performance on IQ tests. As the scores improve, it’s likely that DNA IQ predictions will become routinely available. But how will parents and educators use that information?

To behavioral geneticist Eric Turkheimer, the chance that genetic data will be used for both good and bad is what makes the new technology “simultaneously exciting and alarming.” —Antonio Regalado
